Jul 30, 2014

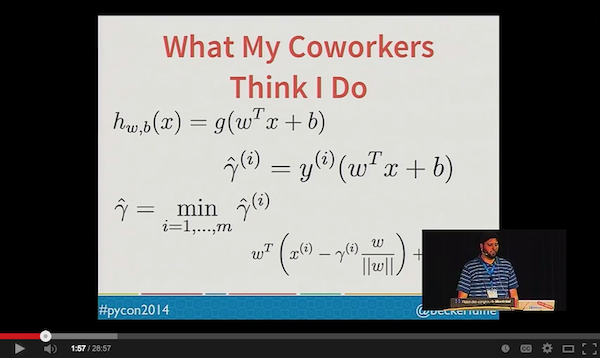

This is the first post in a multi-part series in which I will explain the details of the language prediction model I presented in my PyCon 2014 talk. If you make it all the way through, you will learn how to create and deploy a language prediction model of your own.

Realtime predictive analytics using scikit-learn & RabbitMQ

OSEMN

I’m not sure if Hilary Mason originally coined the term OSEMN, but I certainly learned it from her. OSEMN (pronounced awesome) is a data science process followed by many data scientists. It stands for Obtain, Scrub, Explore, Model, and iNterpret. As Hilary put it in a blog post on the subject: “Different data scientists have different levels of expertise with each of these 5 areas, but ideally a data scientist should be at home with them all.” As a common process, this is a great start, but it isn’t always enough. If you want to make your model a critical piece of your application, you must also make it accessible and performant. For this reason, I’ll also discuss two additional steps: Deploy and Scale.

Obtain & Scrub

In this post, I’ll cover how I obtained and scrubbed the training data for the predictive algorithm in my talk. For those who didn’t have a chance to watch the talk: I used data from Wikipedia to train an algorithm that predicts the language of a piece of text. We use this algorithm at the company I work for to partition user-generated content for further processing and analysis.

Pagecounts

Step 1, then, is obtaining a dataset we can use to train a predictive model. My friend Rob recommended Wikipedia for this, so I decided to try it out. At the time of this writing, a few datasets extracted from Wikipedia are available online; if none of them fits your needs, you have to generate the dataset yourself, which is what I did. I grabbed the hourly page view counts per article for the past 5 months from dumps.wikimedia.org, then wrote some Python scripts to aggregate these counts and dump the top 50,000 articles for each language.
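The aggregation step could look roughly like the following minimal sketch (not the original scripts). It assumes each hourly pagecounts file is gzip-compressed text with one space-separated record per line, `project page_title view_count bytes`, where the project code is a bare language code such as `en` for Wikipedia and carries a dotted suffix such as `en.b` for sister projects. Verify that layout against the files you actually download; the function names here are hypothetical.

```python
import gzip
from collections import Counter, defaultdict
from typing import Dict, Iterable, Iterator, List


def read_pagecount_lines(paths: Iterable[str]) -> Iterator[str]:
    """Stream lines from hourly pagecounts files (assumed gzip-compressed)."""
    for path in paths:
        with gzip.open(path, "rt", encoding="utf-8", errors="replace") as f:
            yield from f


def aggregate_counts(lines: Iterable[str]) -> Dict[str, Counter]:
    """Sum view counts per language and title across all hourly records."""
    totals: Dict[str, Counter] = defaultdict(Counter)
    for line in lines:
        parts = line.split()
        if len(parts) != 4:
            continue  # skip malformed records
        project, title, views, _size = parts
        if "." in project:
            continue  # assumption: dotted codes such as "en.b" are not Wikipedia
        if not views.isdigit():
            continue  # skip records with a non-numeric view count
        totals[project][title] += int(views)
    return totals


def top_articles(totals: Dict[str, Counter], n: int = 50_000) -> Dict[str, List[str]]:
    """Return the n most-viewed titles for each language."""
    return {
        lang: [title for title, _ in counts.most_common(n)]
        for lang, counts in totals.items()
    }
```

Usage would be something like `top_articles(aggregate_counts(read_pagecount_lines(paths)))`, where `paths` is your list of downloaded files. Summing into one `Counter` per language keeps memory proportional to the number of distinct titles rather than the number of hourly files.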

Export bot

After this, I wrote an insanely simple bot to execute queries against Wikipedia’s Special:Export page. I originally considered using Scrapy, since I’d been looking for an excuse to use it, but a quick read-through of its tutorial left me feeling that Scrapy was overkill for my problem, so I decided a simple bot would be more appropriate. While inspecting the fields of the Special:Export web form with Chrome Developer Tools, I stumbled upon a pretty cool trick: under the “Network” tab, if you right-click a request (ctrl-click on a Mac), you can use “Copy as cURL” to get a curl command that reproduces the exact request made by the browser (headers, User-Agent and all). This makes it easy to write a bot that interacts with a single web form. The bot code looks a little something like this:

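A minimal sketch of such a bot, using only the standard library, might look like this. It replays a POST to Special:Export for a list of titles. The per-language URL pattern and the form field names (`pages`, `curonly`, `action`) are assumptions based on MediaWiki’s export form, so confirm them with “Copy as cURL” before relying on them; the User-Agent string is a placeholder.

```python
import urllib.parse
import urllib.request
from typing import List


def build_export_request(lang: str, titles: List[str]) -> urllib.request.Request:
    """Build a POST request asking Special:Export for the given titles."""
    # Assumed URL pattern: one Wikipedia subdomain per language code.
    url = f"https://{lang}.wikipedia.org/wiki/Special:Export"
    form = {
        "pages": "\n".join(titles),  # assumed field: one title per line
        "curonly": "1",              # assumed field: current revision only
        "action": "submit",
    }
    data = urllib.parse.urlencode(form).encode("utf-8")
    # Placeholder User-Agent; identify your bot and a contact address.
    headers = {"User-Agent": "language-model-bot/0.1 (placeholder contact)"}
    return urllib.request.Request(url, data=data, headers=headers, method="POST")


def export_pages(lang: str, titles: List[str], timeout: float = 60.0) -> bytes:
    """Send the request and return the raw export XML."""
    request = build_export_request(lang, titles)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()
```

Keeping request construction separate from the network call makes it easy to compare the generated request with the one Chrome copied for you.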

The final version of my bot splits the list of articles into small chunks, since the Special:Export page throws 503 errors when requests are too large.
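The chunking could be sketched as follows. This is an illustration rather than the final bot: the default chunk size of 50 and the exponential backoff on 503 responses are assumptions, and `fetch` is any callable that exports one chunk of titles (for example, a function that POSTs them to Special:Export).

```python
import time
import urllib.error
from typing import Callable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def chunked(items: Sequence[str], size: int) -> Iterator[List[str]]:
    """Split items into consecutive lists of at most `size` elements."""
    for start in range(0, len(items), size):
        yield list(items[start:start + size])


def export_in_chunks(
    titles: Sequence[str],
    fetch: Callable[[List[str]], T],
    chunk_size: int = 50,  # hypothetical; shrink it if 503s persist
    max_retries: int = 3,
    backoff: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[T]:
    """Call fetch on each chunk, retrying with exponential backoff on HTTP 503."""
    results: List[T] = []
    for chunk in chunked(titles, chunk_size):
        for attempt in range(max_retries + 1):
            try:
                results.append(fetch(chunk))
                break
            except urllib.error.HTTPError as err:
                # Re-raise anything that is not a 503, or a 503 on the last try.
                if err.code != 503 or attempt == max_retries:
                    raise
                sleep(backoff * 2 ** attempt)
    return results
```

Injecting `sleep` lets you test the retry logic without actually waiting.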

Convert to plain text

The Special:Export page on Wikipedia returns an XML file that contains the page contents along with other metadata. The page contents include wiki markup, which isn’t useful for my purposes, so I needed to scrub the markup to convert the pages to plain text. Fortunately, I found a tool that already does this. Its one downside is that it produces output in a format that looks strikingly similar to XML but is not actually valid XML. To address this, I wrote a simple regex-based parser that looks something like this:

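A minimal sketch of such a parser follows. It assumes (hypothetically) that each article in the tool’s output starts with a header line of the form `<doc id="..." url="..." title="...">`, followed by the plain text, and ends with `</doc>` on its own line. It matches the header with a regex and accumulates body lines until the closing tag, so only one article is held in memory at a time.

```python
import re
from typing import Dict, Iterable, Iterator, List, Optional, Union

# Assumed header line emitted by the markup-stripping tool, e.g.
# <doc id="12" url="https://en.wikipedia.org/wiki?curid=12" title="Anarchism">
HEADER_RE = re.compile(r'^<doc id="(\d+)" url="([^"]*)" title="([^"]*)">\s*$')

Page = Dict[str, Union[int, str]]


def iter_pages(lines: Iterable[str]) -> Iterator[Page]:
    """Yield one dict (id, url, title, text) per <doc> block."""
    page: Optional[Page] = None
    body: List[str] = []
    for line in lines:
        if page is None:
            match = HEADER_RE.match(line)
            if match:  # start of a new article; ignore anything else
                page = {
                    "id": int(match.group(1)),
                    "url": match.group(2),
                    "title": match.group(3),
                }
                body = []
        elif line.strip() == "</doc>":
            page["text"] = "".join(body).strip()
            yield page
            page = None
        else:
            body.append(line)


def parse_pages(filename: str) -> Iterator[Page]:
    """Stream pages from filename without loading the whole file."""
    with open(filename, encoding="utf-8") as f:
        yield from iter_pages(f)
```

Splitting the line-level logic into `iter_pages` means it can be exercised on a plain list of strings, while `parse_pages` streams straight from disk.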

This function reads every page in filename and yields a dictionary with the id (an integer tracking the version of the page), the url (a permanent link to this version of the page), the title, and the plain text content of the page. Because it goes through the file one article at a time and uses the yield keyword, the function is a generator, which makes it more memory efficient: it never needs to hold the whole file in memory.

What’s next?

In my next post I will cover the explore step using some of Python’s state-of-the-art tools for data manipulation and visualization. You’ll also get your first taste of scikit-learn, my machine learning library of choice. If you have any questions or comments, please post them below!